AI PDFing in 2025? Claude's your guy

This is the post I wish I found the last 2 times I did this research.  #ai #claude #llm #pdfs #tooling #automation

#ai #claude #llm #pdfs #tooling #automation

Whether you’re improving your existing AI toolkit, or you’re proving the value of AI for your company, if you’ve got PDFs as part of the input data, I expect this will be valuable.

tl;dr

As of July 2025, the best way to analyze a PDF is via Anthropic’s API. Crazy easy, 1 API call, base64 PDF data and your prompt. The accuracy is fantastic, and it’s hilarious to compare the results of trying the same thing with openai. I had a hard time finding conclusions like this outside of forum posts, so hopefully this is useful to you for the next 6 months while it remains true ☺️.

If you need a reason to trust my opinion, or you’re curious, read on…

How I got here

More than a year ago I started a project to introduce our first AI driven feature and after a lot of testing, research into AI APIs, and research into our business problems, I decided on extracting structured data from PDFs. TL;DR from then, I needed to prove the ROI as clearly as possible and as the first person putting my neck out I was extremely focused on making it as small a slice as possible. At the time, you could put a PDF into chatgpt and it would be okay usually, and you could put a PDF into the API and it would be bad.

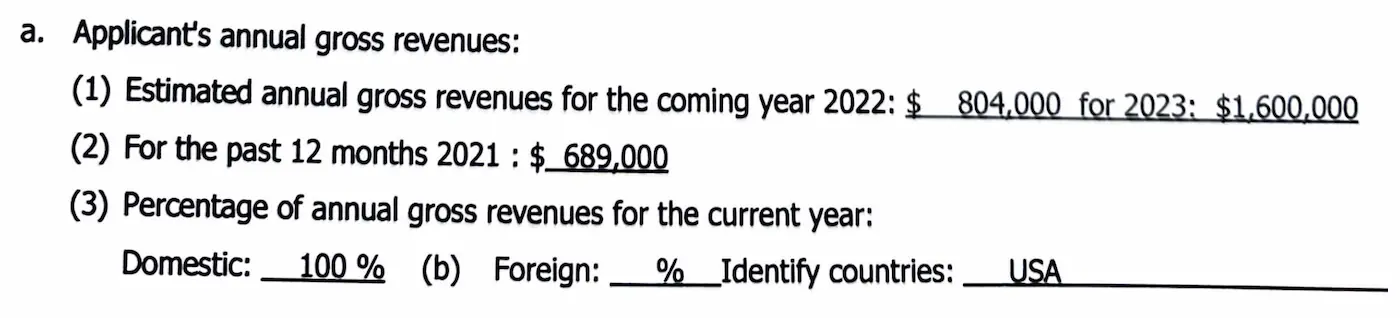

Example PDF with a chunk of a scanned form that’s been copied and is misaligned on the page. Reading Forms = $$

I ran experiments with every foundation model, extracting PDF content locally (PDFMiner, etc.) and pushing raw text as part of the prompt, I tried providers like AWS Textract, and Unstract (note: I actually thought their service was quite nice), and had a range of results. I was swimming in Google colab docs trying all of this. Ultimately, the strategy that yielded the best results, and fit my cost+time constraints was cumbersome, but effective. In November 2024, I would’ve recommended cutting the PDF by page, and converting each page to a jpeg, and then uploading those as files with the prompt. The multi-modal models at the time did a pretty excellent job with images and text, as well as getting the benefit of identifying images by page number in the responses. This is all to say, I spent a lot of time making that decision, and we did ship a production, profitable, compelling product that opened the door for more AI projects.

I’ve moved companies since then and we come across a somewhat similar usecase (funny enough it’s also driven by how confusing insurance can be) and I needed to refresh myself on that research. In November 2024, Anthropic had a beta feature to ease PDF analysis by basically doing the same PDF->Image process behind the scenes for you, but I didn’t have the bureaucratic temperament or time to get us approval for anthropic. Since then, it seems like that has gone GA and wow. Life. Is. Good.

What took me weeks for a PoC, and months to get it in production (not a fault of the tech), took me a week this time and roughly 1 day to be happy with the accuracy of the AI analysis.

Why It Matters

- PDFs are everywhere: policies, contracts, intake forms.

- PDFs are also bullshit: structured PDF documents, word docs as PDF, PDFs of scanned images of printed forms, it’s all “PDF”.

- Sometimes a hammer is the right tool: We give-up on deterministically converting PDF structure to text reliably and just analyze the image.

Vendor specific without lock in

As much as I’m an Anthropic fanboy, Anthropic did us a really nice favor by making this feature completely transparent. You don’t need to use a special API endpoint, you don’t need to upload the file to their magic assistant API before using it in your prompt, it’s just a single normal API call. If another provider offers a better solution, you won’t be boxed in. The curl PDF example they give is really what you want. We use Elixir, and converting the curl command to a Req request is so easy, no provider client needed or AI framework, it’s just a web request.

Don’t take it from me, here’s an example

It’s easy to test and confirm that Anthropic is the best with PDFs, not only because the quality of life is so much better (see example code), but the quality of the results is there too. Credit to: Anthropic PDF Support | You can download the sample image as a PDF here.

The PDF was ~2k input tokens, claude-sonnet-4-20250514 is $3.75/Mtokens, so we are looking at just under 1 cent(~$0.0075).

base64 -i pdfSample.pdf | tr -d '\n' > pdf_base64.txt

# Create a JSON request file using the pdf_base64.txt content

jq -n --rawfile PDF_BASE64 pdf_base64.txt '{

"model": "claude-sonnet-4-20250514",

"max_tokens": 1024,

"messages": [{

"role": "user",

"content": [{

"type": "document",

"source": {

"type": "base64",

"media_type": "application/pdf",

"data": $PDF_BASE64

}

},

{

"type": "text",

"text": "Give me a 1 sentence answer or less, what is a revenue value from this document?"

}]

}]

}' > request.json

# Send the API request using the JSON file

curl https://api.anthropic.com/v1/messages \

-H "content-type: application/json" \

-H "x-api-key: $ANTHROPIC_API_KEY" \

-H "anthropic-version: 2023-06-01" \

-d @request.json

Bonus

Try converting a weird scanned form today and see if Claude can beat your current stack. It’s genuinely as easy as it looks.

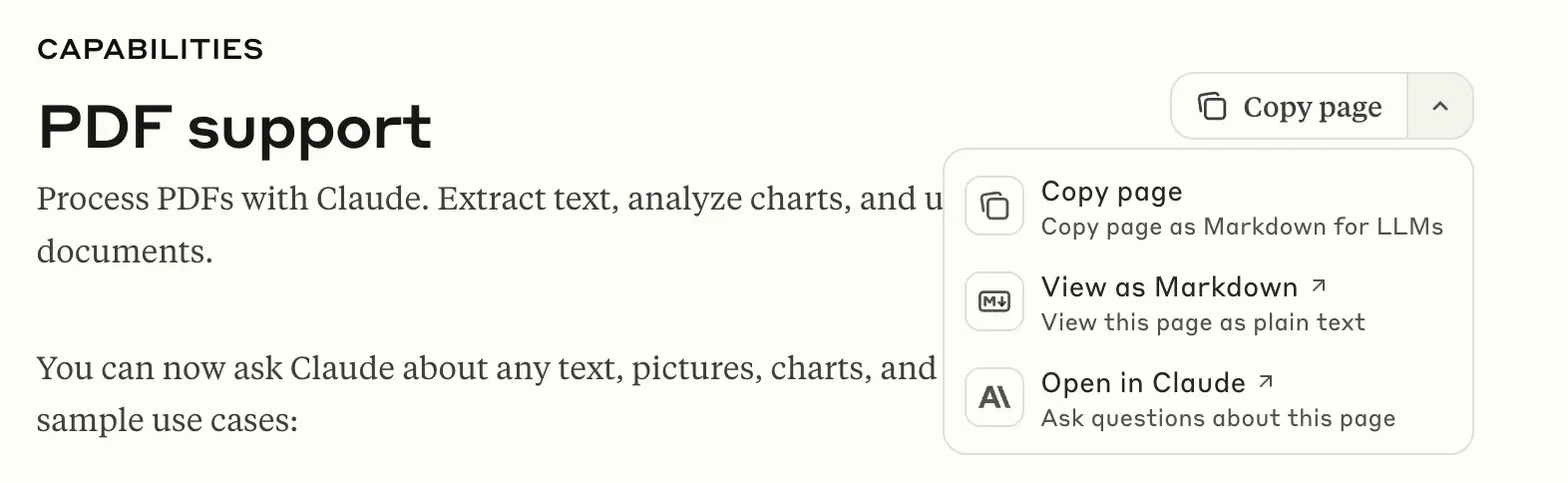

To my AI first thinkers, the Anthropic docs even have a button to get the page as markdown. I usually give the URL to claude code and let it scrape however it wants, but if you’re building a local doc center for stuff like this, you can just give the docs to your AI of choice and ask for an implementation in your language and code style.

Image of the Anthropic docs header, including a “copy” button with dropdown for format of copy for LLM convenience